
This article targets Istio 1.6 clusters. For the multi-cluster architecture in Istio 1.8 and later, see: https://www.ipyker.com/post/istiod-mutli-primary/

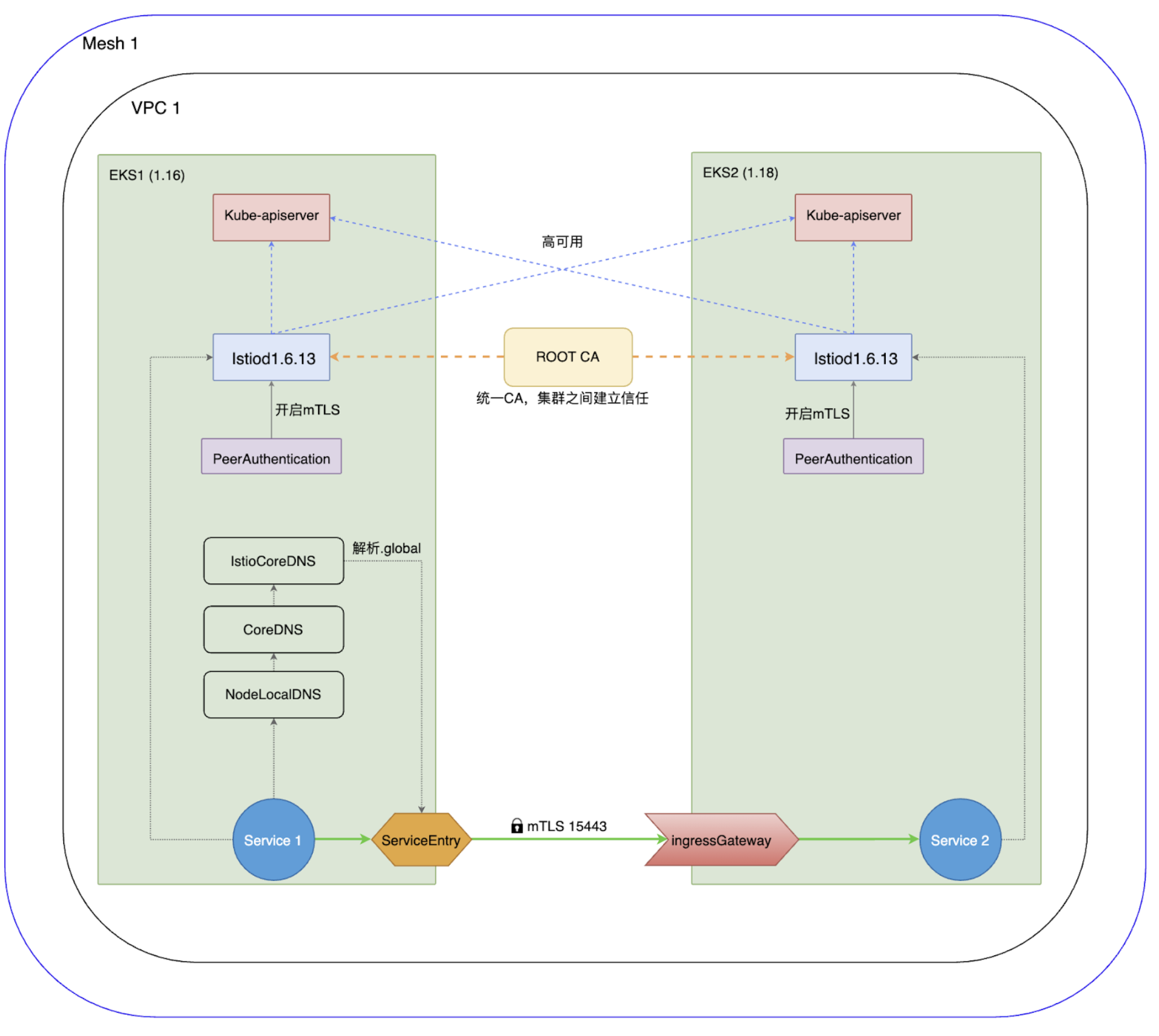

Multi-primary mode architecture

Architecture overview

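- Each Istiod is installed with an intermediate certificate signed by a shared root CA, which establishes trust between the clusters.
- Installing Istiod also requires the istiocoredns component, which resolves cross-cluster service names that carry the .global suffix.
- Through a ServiceEntry, services talk to each other over mutual TLS: a request from cluster 1 to service2 is routed to port 15443 of cluster 2's ingressgateway. Port 15443 is served by a special SNI-aware Envoy listener, which load-balances incoming traffic across the pods of the appropriate service in the target cluster.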
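Conceptually, the gateway on port 15443 maps a `.global` name back to the in-cluster Service name before load-balancing. In Istio 1.6 this is done by an EnvoyFilter that is installed when `includeEnvoyFilter: true` is set. The function below is only a simplified sketch of that suffix rewrite, assuming `globalDomainSuffix: global` and `clusterDomain: cluster.local` as configured later in this article; `global_to_local_host` is a hypothetical name, and the real filter works on Envoy cluster names rather than plain hostnames.

```shell
# Simplified sketch (hypothetical helper): mimic the ".global" -> ".svc.cluster.local"
# rewrite that the multicluster EnvoyFilter applies at the 15443 gateway.
# Assumes globalDomainSuffix=global and clusterDomain=cluster.local.
global_to_local_host() {
  printf '%s\n' "$1" | sed -E 's/\.global$/.svc.cluster.local/'
}

# The name requested from istio-cluster-001 and the local name it maps to in istio-cluster-002
global_to_local_host httpbin.bar.global   # prints httpbin.bar.svc.cluster.local
```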

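Prerequisites

- Two Kubernetes clusters whose API servers can reach each other.
- A CA certificate; one self-signed with openssl is fine.
- The two clusters used in this article are named istio-cluster-001 and istio-cluster-002.

The host used for these steps has credentials for both clusters, so for shared resources the step of copying files to each cluster before applying them is skipped.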

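Deploy the certificates

Before installing Istio, create a Secret named cacerts in the istio-system namespace. Generate the CA certificate and the intermediate certificate with openssl, then deploy them to Kubernetes; the Secret must include all of the input files: ca-cert.pem, ca-key.pem, root-cert.pem and cert-chain.pem.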
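The openssl step itself is not shown here. As a sketch of what it can look like (not Istio's official tooling, which the plugin-ca-cert guide describes), the script below creates a root CA and a single intermediate CA and writes the four files the cacerts Secret needs. Key sizes, subject names, validity periods and the `ca-ext.cnf` file name are assumptions.

```shell
# Sketch: generate a self-signed root CA and one intermediate CA with openssl,
# producing the four files the cacerts Secret expects.
# Assumptions: RSA 2048 keys, SHA-256, and the subjects/validity periods below
# are illustrative; adjust them for real use.
set -eu

# Extensions marking both certificates as CAs that may sign certificates.
cat > ca-ext.cnf <<'EOF'
basicConstraints = critical, CA:TRUE
keyUsage = critical, keyCertSign, cRLSign, digitalSignature
EOF

# 1. Root CA: key + self-signed certificate (keep root-key.pem offline).
openssl req -new -newkey rsa:2048 -nodes -sha256 -subj "/O=Istio/CN=Root CA" \
  -keyout root-key.pem -out root-csr.pem
openssl x509 -req -in root-csr.pem -signkey root-key.pem -days 3650 -sha256 \
  -extfile ca-ext.cnf -out root-cert.pem

# 2. Intermediate CA: key + CSR, signed by the root.
openssl req -new -newkey rsa:2048 -nodes -sha256 -subj "/O=Istio/CN=Intermediate CA" \
  -keyout ca-key.pem -out ca-csr.pem
openssl x509 -req -in ca-csr.pem -CA root-cert.pem -CAkey root-key.pem \
  -CAcreateserial -days 730 -sha256 -extfile ca-ext.cnf -out ca-cert.pem

# 3. cert-chain.pem: the intermediate certificate followed by the root.
cat ca-cert.pem root-cert.pem > cert-chain.pem

# Sanity check: the intermediate must verify against the root.
openssl verify -CAfile root-cert.pem ca-cert.pem
```

Only ca-cert.pem, ca-key.pem, root-cert.pem and cert-chain.pem go into the Secret; root-key.pem is needed again only if the root later signs another cluster's intermediate.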

```shell
# Deploy the certificates to istio-cluster-001
$ kubectl --kubeconfig=/root/.kube/istio-cluster-001 create namespace istio-system
$ kubectl --kubeconfig=/root/.kube/istio-cluster-001 create secret generic cacerts -n istio-system \
    --from-file=./ca-cert.pem \
    --from-file=./ca-key.pem \
    --from-file=./root-cert.pem \
    --from-file=./cert-chain.pem

# Deploy the certificates to istio-cluster-002
$ kubectl --kubeconfig=/root/.kube/istio-cluster-002 create namespace istio-system
$ kubectl --kubeconfig=/root/.kube/istio-cluster-002 create secret generic cacerts -n istio-system \
    --from-file=./ca-cert.pem \
    --from-file=./ca-key.pem \
    --from-file=./root-cert.pem \
    --from-file=./cert-chain.pem
```
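Because both clusters receive the same namespace and the same Secret, the two command groups can also be folded into one loop over the kubeconfig files. `create_cacerts_in_clusters` is a hypothetical helper, sketched under the assumption that the four .pem files sit in the current directory, as in the commands above.

```shell
# Hypothetical helper: run the namespace + cacerts Secret creation once per
# kubeconfig passed as an argument.
# Assumes ca-cert.pem, ca-key.pem, root-cert.pem and cert-chain.pem are in the
# current directory.
create_cacerts_in_clusters() {
  local kubeconfig
  for kubeconfig in "$@"; do
    kubectl --kubeconfig="$kubeconfig" create namespace istio-system
    kubectl --kubeconfig="$kubeconfig" create secret generic cacerts -n istio-system \
      --from-file=./ca-cert.pem \
      --from-file=./ca-key.pem \
      --from-file=./root-cert.pem \
      --from-file=./cert-chain.pem
  done
}
```

Usage: `create_cacerts_in_clusters /root/.kube/istio-cluster-001 /root/.kube/istio-cluster-002`.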

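These certificates were generated in advance. Both clusters can share the same intermediate certificate, or the root CA can sign a separate certificate for each cluster; for detailed steps see: https://istio.io/latest/docs/tasks/security/cert-management/plugin-ca-cert/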

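Install Istiod

Prepare the IstioOperator installation file. Cluster 1 and cluster 2 both use the configuration below; pay attention to the explanations in the comments.

This article covers only the Istiod multi-cluster settings; the observability components are left disabled.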

```yaml
# istio-cluster-001-iop.yaml (for istio-cluster-002, change only clusterName)
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  addonComponents:
    grafana:
      enabled: false
      k8s:
        replicaCount: 1
    istiocoredns: # istiocoredns must be enabled; cross-cluster resolution depends on it
      enabled: true
    kiali:
      enabled: false
      k8s:
        replicaCount: 1
    prometheus:
      enabled: false
      k8s:
        replicaCount: 1
    tracing:
      enabled: false
  components:
    base:
      enabled: true
    citadel:
      enabled: false
      k8s:
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
    cni:
      enabled: false
    egressGateways:
    - enabled: true
      k8s:
        env:
        - name: ISTIO_META_ROUTER_MODE
          value: sni-dnat
        hpaSpec:
          maxReplicas: 5
          metrics:
          - resource:
              name: cpu
              targetAverageUtilization: 80
            type: Resource
          minReplicas: 1
          scaleTargetRef:
            apiVersion: apps/v1
            kind: Deployment
            name: istio-egressgateway
        resources:
          limits:
            cpu: 2000m
            memory: 1024Mi
          requests:
            cpu: 100m
            memory: 128Mi
        service:
          ports:
          - name: http2
            port: 80
            targetPort: 8080
          - name: https
            port: 443
            targetPort: 8443
          - name: tls
            port: 15443
            targetPort: 15443
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
      name: istio-egressgateway
    ingressGateways: # the ingress gateway is required for cross-cluster traffic
    - enabled: true
      k8s:
        env:
        - name: ISTIO_META_ROUTER_MODE
          value: sni-dnat
        hpaSpec:
          maxReplicas: 5
          metrics:
          - resource:
              name: cpu
              targetAverageUtilization: 80
            type: Resource
          minReplicas: 1
          scaleTargetRef:
            apiVersion: apps/v1
            kind: Deployment
            name: istio-ingressgateway
        resources:
          limits:
            cpu: 2000m
            memory: 1024Mi
          requests:
            cpu: 100m
            memory: 128Mi
        service:
          ports:
          - name: status-port
            port: 15021
            targetPort: 15021
          - name: http2
            port: 80
            targetPort: 8080
          - name: https
            port: 443
            targetPort: 8443
          - name: tls
            port: 15443
            targetPort: 15443
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
      name: istio-ingressgateway
    istiodRemote:
      enabled: false
    pilot:
      enabled: true
      k8s:
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        readinessProbe:
          httpGet:
            path: /ready
            port: 8080
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 5
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
    policy:
      enabled: false
      k8s:
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        hpaSpec:
          maxReplicas: 5
          metrics:
          - resource:
              name: cpu
              targetAverageUtilization: 80
            type: Resource
          minReplicas: 1
          scaleTargetRef:
            apiVersion: apps/v1
            kind: Deployment
            name: istio-policy
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
    telemetry:
      enabled: false
      k8s:
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: GOMAXPROCS
          value: "6"
        hpaSpec:
          maxReplicas: 5
          metrics:
          - resource:
              name: cpu
              targetAverageUtilization: 80
            type: Resource
          minReplicas: 1
          scaleTargetRef:
            apiVersion: apps/v1
            kind: Deployment
            name: istio-telemetry
        replicaCount: 1
        resources:
          limits:
            cpu: 4800m
            memory: 4G
          requests:
            cpu: 1000m
            memory: 1G
        strategy:
          rollingUpdate:
            maxSurge: 100%
            maxUnavailable: 25%
  hub: docker.io/istio
  meshConfig:
    defaultConfig:
      proxyMetadata: {}
    enablePrometheusMerge: false
  profile: default
  tag: 1.6.13
  values:
    base:
      validationURL: ""
    clusterResources: true
    gateways:
      istio-egressgateway:
        autoscaleEnabled: true
        env:
          ISTIO_META_REQUESTED_NETWORK_VIEW: "external" # request the "external" network view
        name: istio-egressgateway
        secretVolumes:
        - mountPath: /etc/istio/egressgateway-certs
          name: egressgateway-certs
          secretName: istio-egressgateway-certs
        - mountPath: /etc/istio/egressgateway-ca-certs
          name: egressgateway-ca-certs
          secretName: istio-egressgateway-ca-certs
        type: ClusterIP
        zvpn: {}
      istio-ingressgateway:
        applicationPorts: ""
        autoscaleEnabled: true
        debug: info
        domain: ""
        env: {}
        meshExpansionPorts:
        - name: tcp-pilot-grpc-tls
          port: 15011
          targetPort: 15011
        - name: tcp-istiod
          port: 15012
          targetPort: 15012
        - name: tcp-citadel-grpc-tls
          port: 8060
          targetPort: 8060
        - name: tcp-dns-tls
          port: 853
          targetPort: 8853
        name: istio-ingressgateway
        secretVolumes:
        - mountPath: /etc/istio/ingressgateway-certs
          name: ingressgateway-certs
          secretName: istio-ingressgateway-certs
        - mountPath: /etc/istio/ingressgateway-ca-certs
          name: ingressgateway-ca-certs
          secretName: istio-ingressgateway-ca-certs
        type: LoadBalancer
        zvpn: {}
    global:
      arch:
        amd64: 2
        ppc64le: 2
        s390x: 2
      configValidation: true
      controlPlaneSecurityEnabled: true # secure control-plane traffic with TLS
      defaultNodeSelector: {}
      podDNSSearchNamespaces: # add "global" to the pods' DNS search domains
      - global
      defaultPodDisruptionBudget:
        enabled: true
      defaultResources:
        requests:
          cpu: 10m
      enableHelmTest: false
      imagePullPolicy: ""
      imagePullSecrets: []
      istioNamespace: istio-system
      istiod:
        enableAnalysis: false
        enabled: true
      jwtPolicy: third-party-jwt
      logAsJson: false
      logging:
        level: default:info
      meshExpansion:
        enabled: false
        useILB: false
      meshNetworks: {}
      mountMtlsCerts: false
      multiCluster:
        clusterName: "istio-cluster-001" # set to each cluster's own name
        enabled: true
        globalDomainSuffix: "global" # suffix for cross-cluster service names
        includeEnvoyFilter: true # must be set, and identical, in both clusters
      network: "network1" # must be set, and identical, in both clusters
      omitSidecarInjectorConfigMap: false
      oneNamespace: false
      operatorManageWebhooks: false
      pilotCertProvider: istiod
      priorityClassName: ""
      proxy:
        autoInject: enabled
        clusterDomain: cluster.local
        componentLogLevel: misc:error
        enableCoreDump: false
        envoyStatsd:
          enabled: false
        excludeIPRanges: ""
        excludeInboundPorts: ""
        excludeOutboundPorts: ""
        image: proxyv2
        includeIPRanges: '*'
        logLevel: warning
        privileged: false
        readinessFailureThreshold: 30
        readinessInitialDelaySeconds: 1
        readinessPeriodSeconds: 2
        resources:
          limits:
            cpu: 2000m
            memory: 1024Mi
          requests:
            cpu: 100m
            memory: 128Mi
        statusPort: 15020
        tracer: zipkin
      proxy_init:
        image: proxyv2
        resources:
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 10m
            memory: 10Mi
      sds:
        token:
          aud: istio-ca
      sts:
        servicePort: 0
      tracer:
        datadog:
          address: $(HOST_IP):8126
        lightstep:
          accessToken: ""
          address: ""
        stackdriver:
          debug: false
          maxNumberOfAnnotations: 200
          maxNumberOfAttributes: 200
          maxNumberOfMessageEvents: 200
        zipkin:
          address: ""
      trustDomain: cluster.local
      useMCP: false
    grafana:
      accessMode: ReadWriteMany
      contextPath: /grafana
      dashboardProviders:
        dashboardproviders.yaml:
          apiVersion: 1
          providers:
          - disableDeletion: false
            folder: istio
            name: istio
            options:
              path: /var/lib/grafana/dashboards/istio
            orgId: 1
            type: file
      datasources:
        datasources.yaml:
          apiVersion: 1
      env: {}
      envSecrets: {}
      image:
        repository: grafana/grafana
        tag: 6.7.4
      nodeSelector: {}
      persist: false
      podAntiAffinityLabelSelector: []
      podAntiAffinityTermLabelSelector: []
      security:
        enabled: false
        passphraseKey: passphrase
        secretName: grafana
        usernameKey: username
      service:
        annotations: {}
        externalPort: 3000
        name: http
        type: ClusterIP
      storageClassName: ""
      tolerations: []
    istiocoredns:
      coreDNSImage: coredns/coredns
      coreDNSPluginImage: istio/coredns-plugin:0.2-istio-1.1
      coreDNSTag: 1.6.2
    istiodRemote:
      injectionURL: ""
    kiali:
      contextPath: /kiali
      createDemoSecret: false
      dashboard:
        auth:
          strategy: login
        grafanaInClusterURL: http://grafana:3000
        jaegerInClusterURL: http://tracing/jaeger
        passphraseKey: passphrase
        secretName: kiali
        usernameKey: username
        viewOnlyMode: false
      hub: quay.io/kiali
      nodeSelector: {}
      podAntiAffinityLabelSelector: []
      podAntiAffinityTermLabelSelector: []
      security:
        cert_file: /kiali-cert/cert-chain.pem
        enabled: false
        private_key_file: /kiali-cert/key.pem
      service:
        annotations: {}
      tag: v1.18
    mixer:
      adapters:
        kubernetesenv:
          enabled: true
        prometheus:
          enabled: true
          metricsExpiryDuration: 10m
        stackdriver:
          auth:
            apiKey: ""
            appCredentials: false
            serviceAccountPath: ""
          enabled: false
          tracer:
            enabled: false
            sampleProbability: 1
        stdio:
          enabled: false
          outputAsJson: false
        useAdapterCRDs: false
      policy:
        adapters:
          kubernetesenv:
            enabled: true
          useAdapterCRDs: false
        autoscaleEnabled: true
        image: mixer
        sessionAffinityEnabled: false
      telemetry:
        autoscaleEnabled: true
        env:
          GOMAXPROCS: "6"
        image: mixer
        loadshedding:
          latencyThreshold: 100ms
          mode: enforce
        nodeSelector: {}
        podAntiAffinityLabelSelector: []
        podAntiAffinityTermLabelSelector: []
        replicaCount: 1
        sessionAffinityEnabled: false
        tolerations: []
    pilot:
      appNamespaces: []
      autoscaleEnabled: true
      autoscaleMax: 5
      autoscaleMin: 1
      configMap: true
      configNamespace: istio-config
      cpu:
        targetAverageUtilization: 80
      enableProtocolSniffingForInbound: true
      enableProtocolSniffingForOutbound: true
      env: {}
      image: pilot
      keepaliveMaxServerConnectionAge: 30m
      nodeSelector: {}
      podAntiAffinityLabelSelector: []
      podAntiAffinityTermLabelSelector: []
      policy:
        enabled: false
      replicaCount: 1
      tolerations: []
      traceSampling: 1
    prometheus:
      contextPath: /prometheus
      hub: docker.io/prom
      nodeSelector: {}
      podAntiAffinityLabelSelector: []
      podAntiAffinityTermLabelSelector: []
      provisionPrometheusCert: true
      retention: 6h
      scrapeInterval: 15s
      security:
        enabled: true
      tag: v2.15.1
      tolerations: []
    sidecarInjectorWebhook:
      enableNamespacesByDefault: false
      injectLabel: istio-injection
      objectSelector:
        autoInject: true
        enabled: false
      rewriteAppHTTPProbe: true
    telemetry:
      enabled: true
      v1:
        enabled: false
      v2:
        enabled: true
        metadataExchange: {}
        prometheus:
          enabled: true
        stackdriver:
          configOverride: {}
          enabled: false
          logging: false
          monitoring: false
          topology: false
    tracing:
      jaeger:
        accessMode: ReadWriteMany
        hub: docker.io/jaegertracing
        memory:
          max_traces: 50000
        persist: false
        spanStorageType: badger
        storageClassName: ""
        tag: "1.16"
      nodeSelector: {}
      opencensus:
        exporters:
          stackdriver:
            enable_tracing: true
        hub: docker.io/omnition
        resources:
          limits:
            cpu: "1"
            memory: 2Gi
          requests:
            cpu: 200m
            memory: 400Mi
        tag: 0.1.9
      podAntiAffinityLabelSelector: []
      podAntiAffinityTermLabelSelector: []
      provider: jaeger
      service:
        annotations: {}
        externalPort: 9411
        name: http-query
        type: ClusterIP
      zipkin:
        hub: docker.io/openzipkin
        javaOptsHeap: 700
        maxSpans: 500000
        node:
          cpus: 2
        probeStartupDelay: 10
        queryPort: 9411
        resources:
          limits:
            cpu: 1000m
            memory: 2048Mi
          requests:
            cpu: 150m
            memory: 900Mi
        tag: 2.20.0
    version: ""
```
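Per the comments in the file, `clusterName` is the only value that differs between the two clusters, while `network` and `includeEnvoyFilter` must be identical. A minimal sketch for deriving the second file from the first, assuming the `clusterName: "..."` quoting used above; `render_iop_for_cluster` is a hypothetical helper.

```shell
# Hypothetical helper: copy an IstioOperator file, replacing only the quoted
# value of clusterName (values.global.multiCluster.clusterName) and keeping
# any trailing comment on that line.
render_iop_for_cluster() {
  local src="$1" dst="$2" name="$3"
  sed -E "s/^([[:space:]]*clusterName:[[:space:]]*)\"[^\"]*\"/\1\"${name}\"/" "$src" > "$dst"
}
```

Usage: `render_iop_for_cluster istio-cluster-001-iop.yaml istio-cluster-002-iop.yaml istio-cluster-002`, then `diff` the two files to confirm that exactly one line changed.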

Install Istiod in both clusters

```shell
$ istioctl --kubeconfig=/root/.kube/istio-cluster-001 install -f istio-cluster-001-iop.yaml
$ istioctl --kubeconfig=/root/.kube/istio-cluster-002 install -f istio-cluster-002-iop.yaml
```

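Configure CoreDNS to resolve .global

In each cluster, look up the ClusterIP of the istiocoredns Service, then add a `global:53` server block to that cluster's CoreDNS Corefile that forwards to it. `kubectl edit` does not run shell commands, so write the IP in literally in place of the `<istiocoredns-clusterIP>` placeholder.

```shell
# istio-cluster-001: look up the istiocoredns ClusterIP
$ kubectl --kubeconfig=/root/.kube/istio-cluster-001 get svc -n istio-system istiocoredns -o jsonpath='{.spec.clusterIP}'

# Add the following block to the Corefile, replacing <istiocoredns-clusterIP> with the IP above
$ kubectl --kubeconfig=/root/.kube/istio-cluster-001 edit cm coredns -n kube-system
global:53 {
    errors
    cache 30
    forward . <istiocoredns-clusterIP>
}

# istio-cluster-002: repeat the same steps against the second cluster
$ kubectl --kubeconfig=/root/.kube/istio-cluster-002 get svc -n istio-system istiocoredns -o jsonpath='{.spec.clusterIP}'
$ kubectl --kubeconfig=/root/.kube/istio-cluster-002 edit cm coredns -n kube-system
global:53 {
    errors
    cache 30
    forward . <istiocoredns-clusterIP>
}
```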
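Because `kubectl edit` opens the ConfigMap in an editor, a `$(...)` command substitution typed into the Corefile is never evaluated; the istiocoredns ClusterIP has to be resolved first and written in as a literal. A small sketch with the hypothetical helper `render_global_stub_domain`, which prints the server block for a given IP so it can be pasted into the Corefile:

```shell
# Hypothetical helper: print the CoreDNS server block that forwards the
# "global" zone to istiocoredns, given that Service's ClusterIP.
render_global_stub_domain() {
  local ip="$1"
  cat <<EOF
global:53 {
    errors
    cache 30
    forward . ${ip}
}
EOF
}
```

For example, `render_global_stub_domain "$(kubectl --kubeconfig=/root/.kube/istio-cluster-001 get svc -n istio-system istiocoredns -o jsonpath='{.spec.clusterIP}')"` prints the block for istio-cluster-001; repeat with the istio-cluster-002 kubeconfig for the second cluster.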

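Deploy services for verification

Goal: deploy the httpbin service in istio-cluster-002 and the sleep service in istio-cluster-001, then call httpbin from sleep. The .global name must resolve and the request must return a normal response.

Deploying the sleep and httpbin pods is omitted here; follow this link to set up the environment: https://istio.io/v1.6/zh/docs/setup/install/multicluster/gateways/#configure-application-services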

Create the ServiceEntry

```shell
$ cat <<EOF | kubectl --kubeconfig=/root/.kube/istio-cluster-001 apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: httpbin-bar
  namespace: foo
spec:
  hosts:
  - httpbin.bar.global
  location: MESH_INTERNAL
  ports:
  - name: http1
    number: 8000
    protocol: http
  resolution: DNS
  addresses:
  - 240.0.0.2
  endpoints:
  # ingress gateway of istio-cluster-002; traffic enters on port 15443
  - address: ad76555a55ec045daaf71ffbf9c1db1c-667878442.ap-southeast-1.elb.amazonaws.com
    ports:
      http1: 15443
EOF
```
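In this model each remote `.global` service needs its own entry in `addresses`: a VIP that does not have to be routable but should be unique per service, conventionally taken from the 240.0.0.0/4 range (as 240.0.0.2 is here). When more services are exported, a helper like the hypothetical `render_global_serviceentry` can stamp out the manifest and reject VIPs outside that range. The port name `http1`, `protocol: http` and gateway port 15443 follow the example above; the parameter layout is an assumption.

```shell
# Hypothetical helper: render a ServiceEntry for a service in the remote cluster.
#   $1 client namespace   $2 service name   $3 service namespace
#   $4 service port       $5 VIP (unique, from 240.0.0.0/4)
#   $6 remote ingress gateway address
render_global_serviceentry() {
  local client_ns="$1" svc="$2" svc_ns="$3" port="$4" vip="$5" gw="$6"
  # Reject VIPs whose first octet is not 240-255.
  case "${vip%%.*}" in
    24[0-9]|25[0-5]) ;;
    *) echo "VIP $vip is outside 240.0.0.0/4" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: ${svc}-${svc_ns}
  namespace: ${client_ns}
spec:
  hosts:
  - ${svc}.${svc_ns}.global
  location: MESH_INTERNAL
  ports:
  - name: http1
    number: ${port}
    protocol: http
  resolution: DNS
  addresses:
  - ${vip}
  endpoints:
  - address: ${gw}
    ports:
      http1: 15443
EOF
}
```

Usage: `render_global_serviceentry foo httpbin bar 8000 240.0.0.2 <gateway-address> | kubectl --kubeconfig=/root/.kube/istio-cluster-001 apply -f -`.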

Verify from inside the sleep container

```shell
$ kubectl --kubeconfig=/root/.kube/istio-cluster-001 exec -it sleep-f8cbf5b76-m2pxl -n foo -c sleep -- sh
/ # curl httpbin.bar.global:8000 -I
HTTP/1.1 200 OK
server: envoy
date: Sun, 25 Apr 2021 07:57:24 GMT
content-type: text/html; charset=utf-8
content-length: 9593
access-control-allow-origin: *
access-control-allow-credentials: true
x-envoy-upstream-service-time: 7
```
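To script this check instead of reading the headers by eye, a sketch: the hypothetical `check_envoy_200` reads `curl -I` output on stdin and succeeds only if the status is 200 and a `server: envoy` header is present, matching the response shown above.

```shell
# Hypothetical helper: succeed only if the `curl -I` output on stdin has a
# 200 status line and a "server: envoy" header. HTTP headers end in CRLF,
# so carriage returns are stripped first.
check_envoy_200() {
  local headers
  headers="$(tr -d '\r')"
  printf '%s\n' "$headers" | head -n 1 | grep -Eq '^HTTP/[0-9.]+ 200( |$)' || return 1
  printf '%s\n' "$headers" | grep -qi '^server: envoy$'
}
```

Usage, without an interactive shell: `kubectl --kubeconfig=/root/.kube/istio-cluster-001 exec sleep-f8cbf5b76-m2pxl -n foo -c sleep -- curl -sI httpbin.bar.global:8000 | check_envoy_200 && echo ok`.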

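Conclusions

- Cross-cluster service access must use the .global suffix. If both clusters run the same Service (and pods) in the same namespace, svc.namespace and svc.namespace.global behave fundamentally differently: the former resolves to the local cluster's Service, while the latter is resolved by istiocoredns and routed to the remote cluster through the ServiceEntry.
- The setup is highly available: when one cluster's istiod goes down, istiocoredns can still resolve services.
- For cross-cluster calls, the calling side needs a ServiceEntry for the service being called. It is not yet clear whether this can be configured globally.
- This applies in both directions: cluster 1 calling cluster 2 and cluster 2 calling cluster 1 each require ServiceEntry configuration.
- Compared with Istio 1.9.2, routing application requests across clusters is considerably more complex.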
